ChatGPT exhibits regional biases regarding environmental justice matters: Study

Virginia Tech, a university in the United States, has released a report highlighting potential biases in the artificial intelligence (AI) tool ChatGPT, pointing to discrepancies across counties in its responses on environmental justice issues.

In a recent study, researchers from Virginia Tech claimed that ChatGPT struggles to provide localized information related to environmental justice issues.

However, the research found that such information was more readily available in larger, more densely populated states.

“In states with substantial urban populations like Delaware or California, less than 1 percent of the population resided in counties unable to obtain specific information.”

Conversely, areas with smaller populations faced a lack of similar access.

“In rural states such as Idaho and New Hampshire, over 90 percent of the population lived in counties that could not access local-specific information,” the report indicated.

The report also quoted Kim, a lecturer in Virginia Tech’s Department of Geography, who stressed the need for further research as more biases are uncovered.

“While further investigation is required, our results indicate that geographic biases presently exist within the ChatGPT model,” Kim stated.

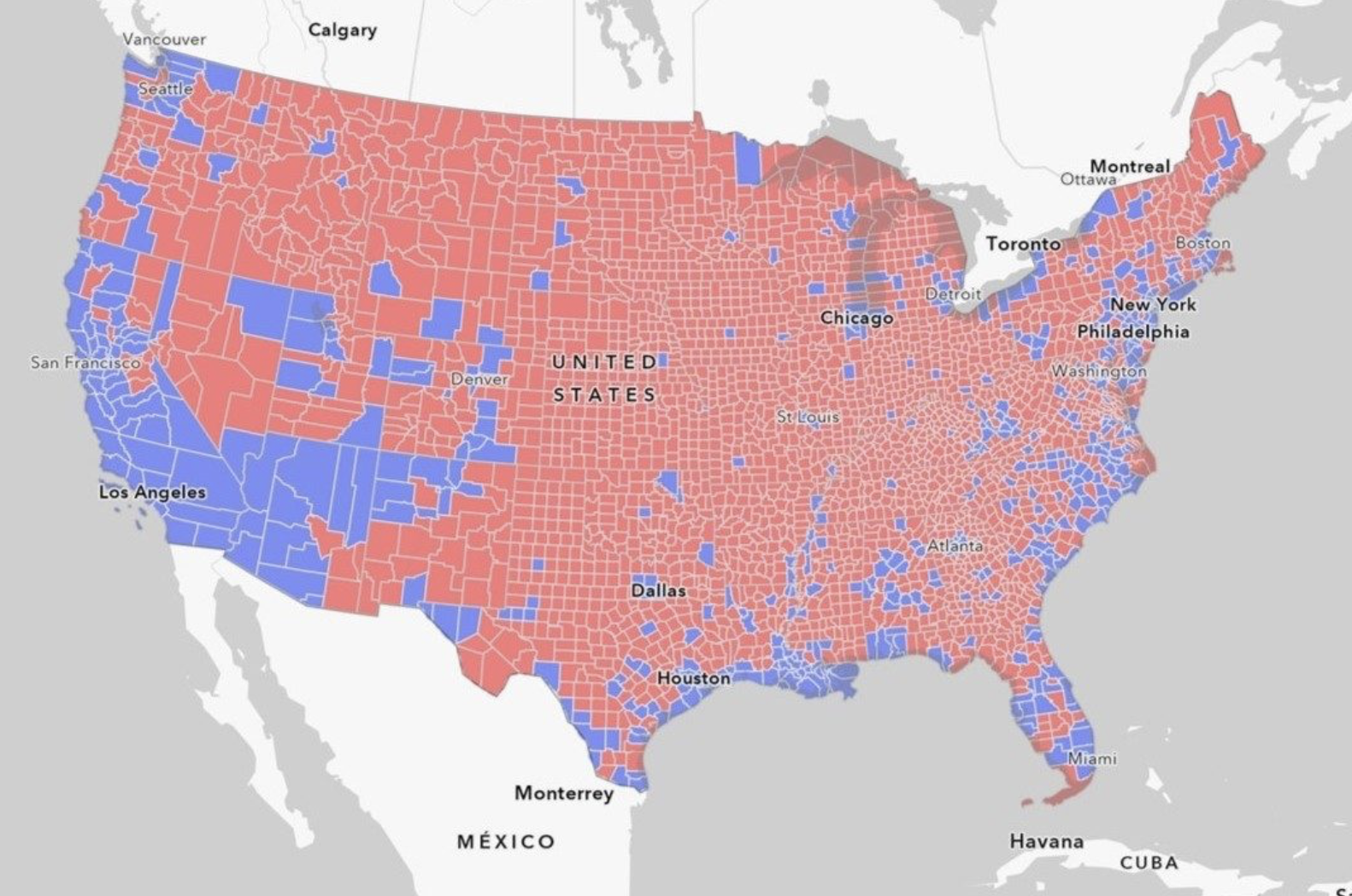

The research paper also featured a map depicting the extent of the U.S. population lacking access to location-specific information on environmental justice topics.

A United States map illustrating areas where residents can access (blue) or cannot access (red) local-specific information on environmental justice issues. Source: Virginia Tech

The findings follow recent reports that researchers have identified potential political biases in ChatGPT.

On August 25, Cointelegraph reported that researchers from the United Kingdom and Brazil had published a study asserting that large language models (LLMs) like ChatGPT produce text containing errors and biases that could mislead readers, and that they can propagate the political biases found in traditional media.