Disclaimer: Opinions expressed on CryptoreNews are those of the writers quoted and do not represent CryptoreNews’ views on whether to buy, sell, or hold any investment. Conduct your own research before making any investment decision, and use the information provided at your own risk.


AI versus AI: How Emerging Technologies Are Fighting Advanced Cryptocurrency Scams

Fraud is the most common form of illegal activity in the cryptocurrency space. According to the Federal Bureau of Investigation (FBI), Americans lost $9.3 billion to crypto scams in the past year.

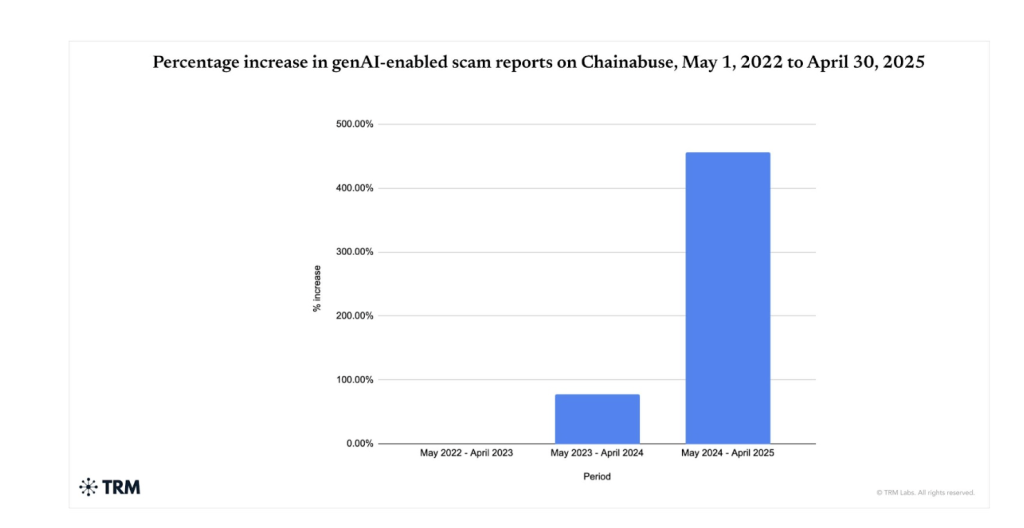

The emergence of artificial intelligence (AI) has exacerbated the issue. A report from the blockchain analytics firm TRM Labs reveals a staggering 456% rise in AI-assisted scams in 2024 compared to prior years.

Source: TRM Labs


As generative AI (GenAI) advances, bad actors can now deploy sophisticated chatbots, deepfake videos, cloned voices, and automated networks of scam tokens at unprecedented scale. As a result, crypto fraud has shifted from a human-driven operation to one that is algorithm-driven, fast, adaptive, and increasingly convincing.

NEW: @zachxbt ALERTS ABOUT A PHONY HYPERLIQUID APP ON THE GOOGLE PLAY STORE pic.twitter.com/yTHQzWDgaK

— DEGEN NEWS (@DegenerateNews) November 7, 2025

Scams Accelerating at Breakneck Speed

Ari Redbord, global head of policy and government relations at TRM Labs, told Cryptonews that generative models make it possible to launch thousands of scams simultaneously. “We are witnessing a criminal ecosystem that is more intelligent, faster, and infinitely expandable,” he said.

Redbord explained that GenAI models can adapt to a victim’s language, geographic location, and digital footprint. In ransomware cases, for example, he noted that AI is used to identify the victims most likely to comply, draft ransom demands, and automate negotiations.

On the social engineering front, Redbord said deepfake audio and video are being used to deceive individuals and organizations through “executive impersonation” and “family emergency” scams.

Meanwhile, on-chain scams that rely on AI-generated scripts can move assets across many wallets within seconds, laundering funds faster than any human could.
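Speed of this kind is itself a signal a defender could look for. The sketch below is a hypothetical illustration, not a description of any vendor’s system: it assumes a simple list of transfers (the `Transfer` type, `find_rapid_chains`, and the 10-second and three-hop thresholds are all invented for the example) and follows funds that leave each wallet within seconds of arriving.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Transfer:
    src: str          # sending wallet
    dst: str          # receiving wallet
    amount: float
    timestamp: float  # seconds


def find_rapid_chains(transfers, max_gap_seconds=10.0, min_hops=3):
    """Return chains of transfers in which funds leave each intermediate
    wallet within max_gap_seconds of arriving, with at least min_hops hops.
    Thresholds are hypothetical illustration values."""
    outgoing = defaultdict(list)
    for t in transfers:
        outgoing[t.src].append(t)

    def successors(t):
        # Transfers out of t's destination that follow t within the gap.
        return [n for n in outgoing[t.dst]
                if 0 <= n.timestamp - t.timestamp <= max_gap_seconds]

    # A transfer that continues an earlier rapid hop is not a chain start.
    continuations = {n for t in transfers for n in successors(t)}
    chains = []

    def walk(chain):
        nexts = [n for n in successors(chain[-1]) if n not in chain]
        if not nexts:
            if len(chain) >= min_hops:
                chains.append(chain)
            return
        for n in nexts:
            walk(chain + [n])

    for t in transfers:
        if t not in continuations:
            walk([t])
    return chains


transfers = [
    Transfer("victim", "w1", 100.0, 0),
    Transfer("w1", "w2", 99.0, 4),
    Transfer("w2", "w3", 98.0, 7),
    Transfer("w3", "exchange", 97.0, 9),
    Transfer("alice", "bob", 5.0, 0),
    Transfer("bob", "carol", 5.0, 3600),
]
for chain in find_rapid_chains(transfers):
    print(" -> ".join([chain[0].src] + [t.dst for t in chain]))
# prints: victim -> w1 -> w2 -> w3 -> exchange
```

The slow alice-to-carol path is ignored because the second hop comes an hour later; only the burst of four hops in nine seconds is reported.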

AI-Driven Countermeasures

The cryptocurrency sector is increasingly adopting AI-driven defenses to counter these scams. Blockchain analytics companies, cybersecurity firms, exchanges, and academic researchers are developing machine-learning systems aimed at detecting, flagging, and preventing fraud well before victims incur losses.

For instance, Redbord mentioned that artificial intelligence is integrated into every facet of TRM Labs’ blockchain intelligence platform. The company employs machine learning to analyze trillions of data points across over 40 blockchain networks. This enables TRM Labs to map wallet networks, identify patterns, and reveal unusual behavior indicative of possible illegal activities.
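Mapping wallet networks often starts by grouping addresses that are likely controlled by the same entity. The sketch below uses the well-known common-input-ownership heuristic for UTXO chains such as Bitcoin (addresses spent together as inputs to one transaction are assumed to share an owner), implemented with a union-find structure. It is an illustrative stand-in, not TRM Labs’ method, and the input format and `cluster_addresses` name are assumptions.

```python
from collections import defaultdict


class UnionFind:
    """Disjoint-set structure with path halving."""

    def __init__(self):
        self.parent = {}

    def find(self, x):
        self.parent.setdefault(x, x)
        while self.parent[x] != x:
            self.parent[x] = self.parent[self.parent[x]]  # path halving
            x = self.parent[x]
        return x

    def union(self, a, b):
        root_a, root_b = self.find(a), self.find(b)
        if root_a != root_b:
            self.parent[root_b] = root_a


def cluster_addresses(transactions):
    """Group addresses that appear together as inputs to the same transaction.

    `transactions` is a list of input-address lists (an assumed format).
    """
    uf = UnionFind()
    for inputs in transactions:
        if not inputs:
            continue
        uf.find(inputs[0])  # register single-input addresses too
        for addr in inputs[1:]:
            uf.union(inputs[0], addr)
    clusters = defaultdict(set)
    for addr in list(uf.parent):
        clusters[uf.find(addr)].add(addr)
    return list(clusters.values())


txs = [["a1", "a2"], ["a2", "a3"], ["b1"], ["c1", "c2"]]
print(sorted(sorted(c) for c in cluster_addresses(txs)))
# [['a1', 'a2', 'a3'], ['b1'], ['c1', 'c2']]
```

Note that `a1` and `a3` never appear in the same transaction; they are linked transitively through `a2`, which is how clustering surfaces relationships that no single transaction shows.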

“These systems do not merely identify patterns—they learn them. As the data evolves, so do the models, adjusting to the ever-changing landscape of crypto markets,” Redbord noted.

This capability allows TRM Labs to detect what human investigators might otherwise overlook—numerous small, seemingly unrelated transactions that together signify a scam, money laundering network, or ransomware operation.
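As a rough illustration of how scattered small transfers can add up to a signal, the sketch below flags destination wallets that receive many small payments from distinct senders within a time window. The thresholds, the `Transfer` type, and `flag_fan_in` are hypothetical; the systems described here learn such patterns with machine learning rather than relying on fixed rules.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Transfer:
    src: str
    dst: str
    amount: float
    timestamp: float  # seconds


def flag_fan_in(transfers, window_seconds=3600, small_limit=100.0,
                min_senders=5, min_total=1000.0):
    """Flag destinations that collect many small transfers from distinct
    senders inside a sliding time window.

    Returns {destination: largest qualifying window total}.
    All thresholds are hypothetical illustration values.
    """
    small_by_dst = defaultdict(list)
    for t in transfers:
        if t.amount <= small_limit:
            small_by_dst[t.dst].append(t)

    flagged = {}
    for dst, items in small_by_dst.items():
        items.sort(key=lambda t: t.timestamp)
        start = 0
        for end in range(len(items)):
            # Shrink the window from the left until it spans at most window_seconds.
            while items[end].timestamp - items[start].timestamp > window_seconds:
                start += 1
            window = items[start:end + 1]
            senders = {t.src for t in window}
            total = sum(t.amount for t in window)
            if len(senders) >= min_senders and total >= min_total:
                flagged[dst] = max(flagged.get(dst, 0.0), total)
    return flagged


# Twelve $90 payments from different wallets, one minute apart.
transfers = [Transfer(f"s{i}", "collector", 90.0, i * 60) for i in range(12)]
transfers += [Transfer("x", "shop", 5000.0, 0), Transfer("y", "friend", 20.0, 10)]
print(flag_fan_in(transfers))  # {'collector': 1080.0}
```

No single $90 payment stands out, and the one large $5,000 payment is excluded by design; only the aggregate pattern crosses the threshold.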

The AI risk platform Sardine is adopting a similar strategy. Established in 2020, Sardine emerged during a period when major cryptocurrency scams were just starting to surface.

Alex Kushnir, Sardine’s head of commercial development, told Cryptonews that the company’s AI fraud detection has three layers.

“Data is fundamental to everything we undertake. We capture intricate signals from every user session occurring on financial platforms such as crypto exchanges—such as device characteristics, whether applications have been altered, or how a user is behaving. Additionally, we leverage a broad network of reliable data providers for any user inputs. Finally, we utilize our consortium data—which may be paramount for combatting fraud—allowing companies to share information regarding malicious actors with one another.”

Kushnir said Sardine uses a real-time risk engine that acts on each of these signals to stop scams as they unfold.
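A real-time engine of this kind evaluates signals from all three layers at the moment of each session or transaction. The sketch below is a deliberately simplified, hypothetical version: every field name, weight, and threshold is invented, and a hand-weighted score stands in for the machine-learning models Kushnir describes.

```python
from dataclasses import dataclass


@dataclass
class SessionSignals:
    # Layer 1: in-session device and behavior signals (hypothetical fields).
    app_tampered: bool
    accounts_on_device: int
    # Layer 2: third-party checks on user-supplied data (hypothetical fields).
    email_age_days: int
    phone_verified: bool
    # Layer 3: consortium reports shared by other platforms (hypothetical field).
    consortium_reports: int


def risk_score(s: SessionSignals) -> float:
    """Combine signals into a 0-1 score. Weights are invented for illustration."""
    score = 0.0
    if s.app_tampered:
        score += 0.35
    if s.accounts_on_device > 3:
        score += 0.15
    if s.email_age_days < 7:
        score += 0.10
    if not s.phone_verified:
        score += 0.05
    score += 0.15 * min(s.consortium_reports, 3)  # cap consortium contribution
    return min(score, 1.0)


def decide(s: SessionSignals, review_at: float = 0.4, block_at: float = 0.7) -> str:
    """Map the score to an action while the session is still in progress."""
    score = risk_score(s)
    if score >= block_at:
        return "block"
    if score >= review_at:
        return "review"
    return "allow"


clean = SessionSignals(False, 1, 900, True, 0)
suspicious = SessionSignals(True, 1, 900, True, 1)
hostile = SessionSignals(True, 5, 2, False, 2)
print([decide(s) for s in (clean, suspicious, hostile)])  # ['allow', 'review', 'block']
```

The consortium layer matters here: the "suspicious" session only reaches review because another platform reported the same identity.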

Kushnir also noted that, for now, agentic AI and large language models (LLMs) are used mainly for automation and efficiency rather than for real-time fraud detection.

“Rather than hard-coding fraud detection parameters, anyone can now simply articulate what they desire a rule to evaluate, and an AI agent will construct, test, and deploy that rule if it aligns with their criteria. The AI agents can even proactively suggest rules based on emerging trends. However, when it comes to assessing risk, machine learning remains the gold standard,” he stated.
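The "construct, test, and deploy" loop Kushnir describes implies a test step that checks a rule against past outcomes before it goes live. The sketch below shows only that step, under stated assumptions: historical events carry a fraud label, a rule is a Python predicate, and deployment requires hypothetical minimum precision and recall. It does not model the AI agent that writes the rule, and `Event`, `backtest`, and `should_deploy` are invented names.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Event:
    amount: float
    account_age_days: int
    new_withdrawal_address: bool
    is_fraud: bool  # label from past investigations (assumed available)


Rule = Callable[[Event], bool]


def backtest(rule: Rule, history: list[Event]) -> tuple[float, float]:
    """Return (precision, recall) of the rule on labeled history."""
    tp = fp = fn = 0
    for e in history:
        hit = rule(e)
        if hit and e.is_fraud:
            tp += 1
        elif hit:
            fp += 1
        elif e.is_fraud:
            fn += 1
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


def should_deploy(rule: Rule, history: list[Event],
                  min_precision: float = 0.8, min_recall: float = 0.5) -> bool:
    """Deploy only if the rule meets the (hypothetical) acceptance criteria."""
    precision, recall = backtest(rule, history)
    return precision >= min_precision and recall >= min_recall


# Candidate rule: new account withdrawing over $1,000 to a never-seen address.
candidate: Rule = lambda e: (e.account_age_days < 7
                             and e.new_withdrawal_address
                             and e.amount > 1000)

history = [
    Event(5000, 2, True, True),
    Event(2500, 1, True, True),
    Event(1200, 3, True, False),
    Event(300, 1, True, True),
    Event(8000, 400, True, False),
    Event(50, 200, False, False),
]
print(should_deploy(candidate, history))                     # False
print(should_deploy(candidate, history, min_precision=0.6))  # True
```

Keeping the acceptance criteria explicit is what lets an automated rule author be trusted: a rule that would block too many legitimate users fails the gate regardless of who or what proposed it.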

Source: Sardine


AI Versus AI Use Cases

These innovations are already demonstrating their effectiveness.

Matt Vega, Sardine’s chief of staff, told Cryptonews that once Sardine identifies a pattern, the firm’s AI runs an in-depth analysis and recommends trend-based measures to stop the attack vector before it materializes.

“This would typically take a human a full day, but with AI, it takes mere seconds,” he said.

For example, Vega said Sardine works closely with leading cryptocurrency exchanges to identify unusual user behavior. User transactions run through Sardine’s decision platform, where AI analysis helps determine how each transaction is handled and gives exchanges advance warning.
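To make "unusual user behavior" concrete, the sketch below compares a withdrawal against the user’s own history using a z-score and maps the result to an outcome. This is a simple statistical stand-in, not Sardine’s decision platform; `assess_withdrawal`, the outcome labels, and the thresholds are hypothetical.

```python
import statistics


def assess_withdrawal(history, amount, review_z=3.0, hold_z=6.0, min_history=5):
    """Return (decision, z_score) for a withdrawal given the user's past amounts.

    Only unusually *large* withdrawals raise risk. Thresholds are hypothetical.
    """
    if len(history) < min_history:
        return "review", None  # not enough baseline to judge
    mean = statistics.mean(history)
    stdev = statistics.pstdev(history)
    if stdev == 0:
        z = float("inf") if amount > mean else 0.0
    else:
        z = (amount - mean) / stdev
    if z >= hold_z:
        return "hold", z
    if z >= review_z:
        return "review", z
    return "approve", z


past = [100, 120, 80, 110, 90]  # mean 100, population stdev about 14.1
print([assess_withdrawal(past, a)[0] for a in (105, 160, 5000)])
# ['approve', 'review', 'hold']
```

A per-user baseline flags a $160 withdrawal for one user and would ignore it for another who routinely moves thousands; production systems combine many such features in learned models.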

A TRM Labs blog post adds that in May, the firm encountered a live deepfake during a video call with a suspected financial grooming scammer. This type of scammer builds a long-term, trusting, and often emotional or romantic relationship with a victim in order to gain access to their funds.

“We suspected this scammer was employing deepfake technology due to the individual’s unnatural-looking hairline,” Redbord explained. “AI detection tools allowed us to support our assessment that the image was likely AI-generated.”

Although TRM Labs spotted the deepfake in this instance, this scam and others like it have taken around $60 million from unsuspecting victims.

Cybersecurity firm Kidas is also using AI to detect and stop scams. Ron Kerbs, founder and CEO of Kidas, told Cryptonews that as AI-enabled scams have surged, Kidas’ proprietary models can now analyze content, behavior, and audiovisual inconsistencies in real time to identify deepfakes and LLM-generated phishing at the point of interaction.

“This enables immediate risk scoring and real-time intervention, which is essential for counteracting automated, large-scale fraud operations,” Kerbs stated.

Kerbs added that just this past week, Kidas’ tool successfully intercepted two separate crypto scam attempts on Discord.

“This rapid detection demonstrates the tool’s vital real-time behavioral analytics capability, effectively preventing the compromise of user accounts and potential financial loss,” he remarked.

Safeguarding Against AI-Enabled Scams

AI-driven tools are clearly being used to identify and prevent sophisticated scams, but these attacks are expected to escalate.

“AI is diminishing the barriers to entry for complex crimes, making these scams highly scalable and personalized, hence they will undoubtedly gain more traction,” Kerbs noted.

Kerbs expects that semi-autonomous malicious AI agents will soon be able to orchestrate entire attack campaigns with minimal human supervision, using untraceable voice-to-voice deepfake impersonation during live calls.

Although the outlook is concerning, Vega emphasized that users can take specific measures to avoid falling victim to such scams.

For instance, he pointed out that many attack vectors rely on spoofed websites that lure users into clicking fraudulent links.

“Users should be vigilant for Greek alphabet letters on websites. Recently, American multinational technology corporation Apple was deceived by an attacker who created a counterfeit website using a Greek ‘A’ letter in Apple. Users should also steer clear of sponsored links and remain attentive to URLs.”
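Lookalike domains of this kind are known as IDN homograph attacks. The sketch below, which uses only Python’s standard library, lists any non-ASCII characters in a URL’s hostname along with their Unicode names, and shows the Punycode (`xn--`) form that a browser may display instead. It is an illustrative check rather than a complete defense, and the function names and example URL are hypothetical.

```python
import unicodedata
from urllib.parse import urlsplit


def non_ascii_characters(url: str) -> list[tuple[str, str]]:
    """List (character, Unicode name) pairs for non-ASCII characters in the hostname."""
    hostname = urlsplit(url).hostname or ""
    return [
        (ch, unicodedata.name(ch, "UNKNOWN"))
        for ch in hostname
        if ord(ch) > 127
    ]


def punycode_hostname(url: str) -> str:
    """Return the ASCII (Punycode) form of the hostname.

    Uses Python's built-in "idna" codec, which implements IDNA 2003.
    """
    hostname = urlsplit(url).hostname or ""
    return hostname.encode("idna").decode("ascii")


# Hypothetical lookalike: U+03B1 GREEK SMALL LETTER ALPHA in place of Latin "a".
spoofed = "https://\u03b1pple.com/login"
genuine = "https://apple.com/login"

print(non_ascii_characters(genuine))  # []
print(non_ascii_characters(spoofed))  # [('α', 'GREEK SMALL LETTER ALPHA')]
print(punycode_hostname(spoofed))     # an "xn--" hostname ending in ".com"
```

The two URLs can look identical on screen, but the spoofed one contains a Greek letter and encodes to an entirely different ASCII domain.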

Meanwhile, companies like Sardine and TRM Labs are working closely with regulators on how to build AI-based safeguards against the risks posed by AI-driven scams.

“We are creating systems that provide law enforcement and compliance professionals with the same speed, scale, and reach that criminals currently possess—from detecting real-time anomalies to identifying coordinated cross-chain laundering. AI is enabling us to transition risk management from a reactive approach to a predictive one,” Redbord asserted.

The post AI vs AI: How New Technologies Are Combating Sophisticated Crypto Scams appeared first on Cryptonews.